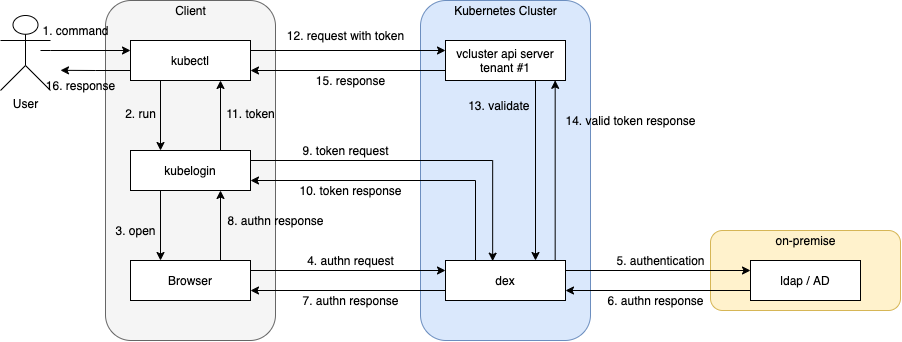

We have set up our vcluster, but now we want to give developers proper access by leveraging their existing LDAP credentials. The idea is to use Dex as a federated OpenID Connect provider and kubelogin as the kubectl plugin for OIDC integration.

Scenario

We’re going to achieve something like this: the user issues a command targeting our vcluster’s API server (kubectl get pods, for example), and kubelogin opens the default browser on the user’s machine to display a login page. After the user enters their LDAP credentials, Dex checks them against the LDAP server, which returns ok/ko. If the credentials are valid, a bearer token is returned to the user (under the hood, to kubelogin) and then forwarded to the API server, which validates it using Dex’s public key. If the token is valid, the command executes successfully and displays the kubectl output.

We will end up with two endpoints:

- https://vcluster-auth.justinpolidori.it for dex

- https://tenant-1-vcluster.justinpolidori.it for the tenant #1 vcluster api server.

I am going to use hostAliases for this demo. (Later you will want to remove these fields and register your own DNS names.)

Dex will be deployed on the host cluster, so it will be available to every vcluster we decide to deploy on it.

Authorization will be managed with Kubernetes RBAC.

Certificates

For this activity we’re going to generate our own CA and use it to sign a certificate described by the following OpenSSL configuration:

    [req]
    req_extensions = v3_req
    distinguished_name = req_distinguished_name
    [req_distinguished_name]
    [ v3_req ]
    basicConstraints = CA:FALSE
    keyUsage = nonRepudiation, digitalSignature, keyEncipherment
    subjectAltName = @alt_names
    [alt_names]
    DNS.1 = *.justinpolidori.it

In this case the certificate will be valid for every subdomain of justinpolidori.it. We will pass the CA to the vcluster’s API server and the certificate to Dex. You can generate them by using this script (change the parameters based on your needs, for example the validity).

For the sake of this demo I am going to rename the ca to openid-ca.pem, the certificate to certificate.pem and the key to key.pem.
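As a rough sketch of what such a script can do, the commands below create a CA and sign a wildcard certificate with plain openssl. The `certs/` directory, the `openid-ca-key.pem` file name, the `CN` values, the 365-day validity and the extra `[ v3_ca ]` section are my assumptions for illustration; only the `[ v3_req ]` part comes from the configuration above.

```shell
mkdir -p certs

# OpenSSL config: [ v3_req ] mirrors the section shown above; [ v3_ca ] is an
# addition (assumption) so the CA certificate is explicitly marked as a CA.
cat > certs/req.cnf <<'EOF'
[req]
req_extensions = v3_req
distinguished_name = req_distinguished_name
[req_distinguished_name]
[ v3_req ]
basicConstraints = CA:FALSE
keyUsage = nonRepudiation, digitalSignature, keyEncipherment
subjectAltName = @alt_names
[alt_names]
DNS.1 = *.justinpolidori.it
[ v3_ca ]
basicConstraints = critical, CA:TRUE
keyUsage = critical, keyCertSign, cRLSign
EOF

# 1. CA private key and self-signed CA certificate (openid-ca.pem)
openssl genrsa -out certs/openid-ca-key.pem 2048
openssl req -x509 -new -key certs/openid-ca-key.pem -days 365 \
  -subj "/CN=demo-openid-ca" -config certs/req.cnf -extensions v3_ca \
  -out certs/openid-ca.pem

# 2. Server private key (key.pem) and certificate signing request
openssl genrsa -out certs/key.pem 2048
openssl req -new -key certs/key.pem -subj "/CN=justinpolidori.it" \
  -config certs/req.cnf -out certs/csr.pem

# 3. Sign the request with the CA, applying the v3_req extensions (SAN included)
openssl x509 -req -in certs/csr.pem -CA certs/openid-ca.pem \
  -CAkey certs/openid-ca-key.pem -CAcreateserial -days 365 \
  -extensions v3_req -extfile certs/req.cnf -out certs/certificate.pem

# Sanity check: the certificate must chain back to our CA
openssl verify -CAfile certs/openid-ca.pem certs/certificate.pem
```

The CA private key only needs to stay on the machine that signs certificates; Dex needs certificate.pem and key.pem, and the API server and kubelogin only need openid-ca.pem.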

Dex

Once we have the certificates we can start to craft our dex deployment. For this use case Dex needs to be set up with https enabled.

Modify the following ConfigMap according to your needs / scenario:

    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: dex
      namespace: dex
    data:
      config.yaml: |
        issuer: https://vcluster-auth.justinpolidori.it
        storage:
          type: memory
          # config:
          #   inCluster: true
        web:
          https: 0.0.0.0:5556
          tlsCert: /etc/dex/tls/certificate.pem
          tlsKey: /etc/dex/tls/key.pem
        connectors:
        - type: ldap
          name: OpenLDAP
          id: ldap
          config:
            # 1) Plain LDAP, without TLS:
            host: myldaphost.it
            insecureNoSSL: false
            insecureSkipVerify: true
            #
            # 2) LDAPS without certificate validation:
            #host: localhost:636
            #insecureNoSSL: false
            #insecureSkipVerify: true
            #
            # 3) LDAPS with certificate validation:
            #host: YOUR-HOSTNAME:636
            #insecureNoSSL: false
            #insecureSkipVerify: false
            #rootCAData: 'CERT'
            # ...where CERT="$( base64 -w 0 your-cert.crt )"
            # This would normally be a read-only user.
            bindDN: <BIND DN>
            bindPW: <LDAP SERVER PASSWORD>
            usernamePrompt: LDAP username
            userSearch:
              baseDN: <YOUR BASE DN>
              filter: "(objectClass=user)"
              username: sAMAccountName
              # "DN" (case sensitive) is a special attribute name. It indicates that
              # this value should be taken from the entity's DN not an attribute on
              # the entity.
              idAttr: sAMAccountName
              emailAttr: mail
              nameAttr: cn
            groupSearch:
              baseDN: <YOUR BASE DN>
              filter: "(objectClass=group)"
              userMatchers:
              # A user is a member of a group when their DN matches
              # the value of a "member" attribute on the group entity.
              - userAttr: DN
                groupAttr: member
              # The group name should be the "cn" value.
              nameAttr: cn
        staticClients:
        - id: kubernetes
          redirectURIs:
          - 'http://localhost:8000'
          name: 'Kubernetes'
          secret: aGVsbG9fZnJpZW5kCg==

Basically we’re telling Dex that it will be exposed at https://vcluster-auth.justinpolidori.it, that it must serve HTTPS with our own certificates, and how to talk to the LDAP server. At the end we also specify a static client: its id and a secret (here a base64-encoded string), which will then be placed in the kubeconfig we share with our Kubernetes tenants.
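To make the secret part concrete, here is a small sketch that decodes the demo secret and generates a random replacement. As far as I know Dex compares the client secret as an opaque string, so the base64 form is a convention rather than a requirement; the 32-byte length and the `new_secret` variable name are assumptions of mine.

```shell
# The demo secret is simply the base64 encoding of a readable string.
printf '%s' 'aGVsbG9fZnJpZW5kCg==' | base64 -d   # prints: hello_friend

# Generate a random secret instead (32 random bytes, base64-encoded on one line).
new_secret=$(head -c 32 /dev/urandom | base64 | tr -d '\n')
echo "$new_secret"
```

Whatever value you pick has to be identical in the `staticClients` entry of the Dex config and in the `--oidc-client-secret` argument of the kubeconfig.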

Then we create Dex’s resources, such as the ClusterRoleBinding, ServiceAccount and Deployment:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: dex
      name: dex
      namespace: dex
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: dex
      template:
        metadata:
          labels:
            app: dex
        spec:
          serviceAccountName: dex
          hostAliases:
          - ip: "<PUT YOUR LB'S IP HERE>"
            hostnames:
            - "vcluster-auth.justinpolidori.it"
          containers:
          - image: ghcr.io/dexidp/dex:v2.30.0
            name: dex
            command: ["/usr/local/bin/dex", "serve", "/etc/dex/cfg/config.yaml"]
            ports:
            - name: https
              containerPort: 5556
            volumeMounts:
            - name: config
              mountPath: /etc/dex/cfg
            - name: dex-openid-certs
              mountPath: /etc/dex/tls
            resources:
              limits:
                cpu: 300m
                memory: 100Mi
              requests:
                cpu: 300m
                memory: 100Mi
            readinessProbe:
              httpGet:
                path: /healthz
                port: 5556
                scheme: HTTPS
          volumes:
          - name: config
            configMap:
              name: dex
              items:
              - key: config.yaml
                path: config.yaml
          - name: dex-openid-certs
            secret:
              secretName: dex-openid-certs
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: dex
      namespace: dex
    spec:
      type: ClusterIP
      ports:
      - name: https
        port: 5556
        protocol: TCP
        targetPort: 5556
      selector:
        app: dex
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        app: dex
      name: dex
      namespace: dex
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: dex
    rules:
    - apiGroups: ["dex.coreos.com"] # API group created by dex
      resources: ["*"]
      verbs: ["*"]
    - apiGroups: ["apiextensions.k8s.io"]
      resources: ["customresourcedefinitions"]
      verbs: ["create"] # To manage its own resources, dex must be able to create customresourcedefinitions
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: dex
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: dex
    subjects:
    - kind: ServiceAccount
      name: dex # Service account assigned to the dex pod, created above
      namespace: dex # The namespace dex is running in
    ---
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: dex
      namespace: dex
      annotations:
        kubernetes.io/ingress.class: nginx
        nginx.ingress.kubernetes.io/proxy-buffer-size: "8k" # if a user, returned from dex, has too many groups, you can adjust this value in case.
        nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
        #nginx.ingress.kubernetes.io/ssl-passthrough: "true"
        nginx.ingress.kubernetes.io/ssl-redirect: "true"
    spec:
      rules:
      - host: vcluster-auth.justinpolidori.it
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: dex
                port:
                  number: 5556
      tls:
      - hosts:
        - vcluster-auth.justinpolidori.it
        secretName: dex-openid-cert-tls

As Dex and ingress above need to use the certificate, we’re going to provide it:

kubectl create secret generic dex-openid-certs --from-file=certificate.pem=./certificate.pem --from-file=key.pem=./key.pem -oyaml --dry-run=client > dex-openid-certs.yaml

kubectl create secret tls dex-openid-cert-tls --cert=./certificate.pem --key=./key.pem -oyaml --dry-run=client > dex-openid-cert-tls.yaml

    apiVersion: v1
    data:
      certificate.pem: <redacted>
      key.pem: <redacted>
    kind: Secret
    metadata:
      name: dex-openid-certs

So it’s time to apply these resources on the host cluster.
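Once the resources are applied, a quick way to check that Dex answers over HTTPS with our certificate is to fetch its OIDC discovery document. The sketch below is only an illustration: `discovery_url` and `check_issuer` are hypothetical helper names, and the curl call assumes the hostname already resolves to your load balancer.

```shell
# Build the OIDC discovery URL for an issuer (a trailing slash is tolerated).
discovery_url() {
  printf '%s/.well-known/openid-configuration\n' "${1%/}"
}

# Fetch the discovery document, trusting only the given CA file.
# Not called here because it needs network access to a running Dex.
check_issuer() {
  curl --silent --show-error --fail --max-time 10 \
    --cacert "$2" "$(discovery_url "$1")"
}

discovery_url https://vcluster-auth.justinpolidori.it
# Usage once Dex is reachable:
#   check_issuer https://vcluster-auth.justinpolidori.it ./openid-ca.pem
```

The `issuer` field in the returned JSON has to match the `issuer` value in the Dex config exactly, since the API server compares the two.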

Vcluster

At this point we need to customize our vcluster’s values.yaml from the previous post: we need to provide the CA to the vcluster as a secret and add a couple of parameters to the k3s API server:

    vcluster:
      image: rancher/k3s:v1.23.3-k3s1
      command:
        - /bin/k3s
      extraArgs:
        - --service-cidr=10.96.0.0/16 # this is equal to the service CIDR from the host cluster
        - --kube-apiserver-arg oidc-issuer-url=https://vcluster-auth.justinpolidori.it
        - --kube-apiserver-arg oidc-client-id=kubernetes
        - --kube-apiserver-arg oidc-ca-file=/var/openid-ca.pem
        - --kube-apiserver-arg oidc-username-claim=email
        - --kube-apiserver-arg oidc-groups-claim=groups
      volumeMounts:
        - mountPath: /data
          name: data
        - mountPath: /var
          name: my-ca
          readOnly: true
      resources:
        limits:
          memory: 2Gi
        requests:
          cpu: 200m
          memory: 256Mi
    volumes:
      - name: my-ca
        secret:
          secretName: my-ca

[...]

kubectl create secret generic my-ca --from-file=openid-key.pem=./openid-key.pem --from-file=openid-ca.pem=./openid-ca.pem -oyaml --dry-run=client > my-ca.yaml

Basically we’re telling the API server that the token issuer is at vcluster-auth.justinpolidori.it, which client ID we configured in Dex, where to find the CA, and then two important parameters:

- oidc-username-claim: the token claim that Kubernetes will use as the user’s identity. In this case we will use the user’s email.

- oidc-groups-claim: the token claim that carries the user’s LDAP groups, so they are propagated to Kubernetes.

Both can be used in the rbac definitions: we can target both the user’s email and the user’s ldap group(s).
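When an RBAC binding does not match, it helps to look at what the token actually contains. The sketch below decodes the payload of a JWT without verifying its signature; `jwt_payload` is a hypothetical helper, and the sample token is built locally from made-up claims just to show the shape of `email` and `groups`.

```shell
# Decode the payload (second dot-separated segment) of a JWT.
# The signature is NOT verified: this is for inspection only.
jwt_payload() {
  local seg
  # base64url -> base64
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the '=' padding; restore it before decoding
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do
    seg="${seg}="
  done
  printf '%s' "$seg" | base64 -d
}

# Sample token with illustrative claims (header and signature are placeholders).
payload='{"email":"myemail@justinpolidori.it","groups":["My-Ldap-group"]}'
token="header.$(printf '%s' "$payload" | base64 | tr -d '=\n' | tr '/+' '_-').signature"

jwt_payload "$token"
```

With a real login, the ID token is the one kubelogin hands to kubectl; the value of `email` is what a `User` subject must match and each entry of `groups` is what a `Group` subject must match.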

Time to deploy.

helm upgrade --install tenant-1 vcluster --values vcluster.yaml --repo https://charts.loft.sh --namespace tenant-1 --repository-config=''

Once the vcluster is up and running we can try to log in through Dex, but first of all we need to craft the kubeconfig.

Kubeconfig

Our kubeconfig will look like this:

    apiVersion: v1
    clusters:
    - cluster:
        insecure-skip-tls-verify: true
        server: https://tenant-1-vcluster.justinpolidori.it
      name: tenant1-vcluster
    contexts:
    - context:
        cluster: tenant1-vcluster
        namespace: default
        user: oidc-user
      name: tenant1-vcluster
    current-context: tenant1-vcluster
    kind: Config
    preferences: {}
    users:
    - name: oidc-user
      user:
        exec:
          apiVersion: client.authentication.k8s.io/v1beta1
          args:
          - oidc-login
          - get-token
          - --oidc-issuer-url=https://vcluster-auth.justinpolidori.it
          - --oidc-client-id=kubernetes
          - --oidc-client-secret=aGVsbG9fZnJpZW5kCg==
          - --oidc-extra-scope=profile
          - --oidc-extra-scope=email
          - --oidc-extra-scope=groups
          - --certificate-authority-data=<OPENID-CA.PEM-BASE64-ENCODED>
          command: kubectl
          env: null
          interactiveMode: IfAvailable
          provideClusterInfo: false

It contains the address of the API server we created in the previous post and the OIDC parameters: Dex’s URL, the client ID and secret, and the base64-encoded CA.
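The base64 value for `--certificate-authority-data` has to be on a single line. A minimal sketch, assuming the CA file is named openid-ca.pem as above; `b64_oneline` is a hypothetical helper that avoids relying on the GNU-only `base64 -w 0`:

```shell
# Print a file as single-line base64 (portable alternative to `base64 -w 0`).
b64_oneline() {
  base64 < "$1" | tr -d '\n'
}

# Usage: paste the output in place of <OPENID-CA.PEM-BASE64-ENCODED>
#   b64_oneline ./openid-ca.pem
```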

Test the flow.

kubectl get pods --kubeconfig ./kubeconfig-oidc.yaml

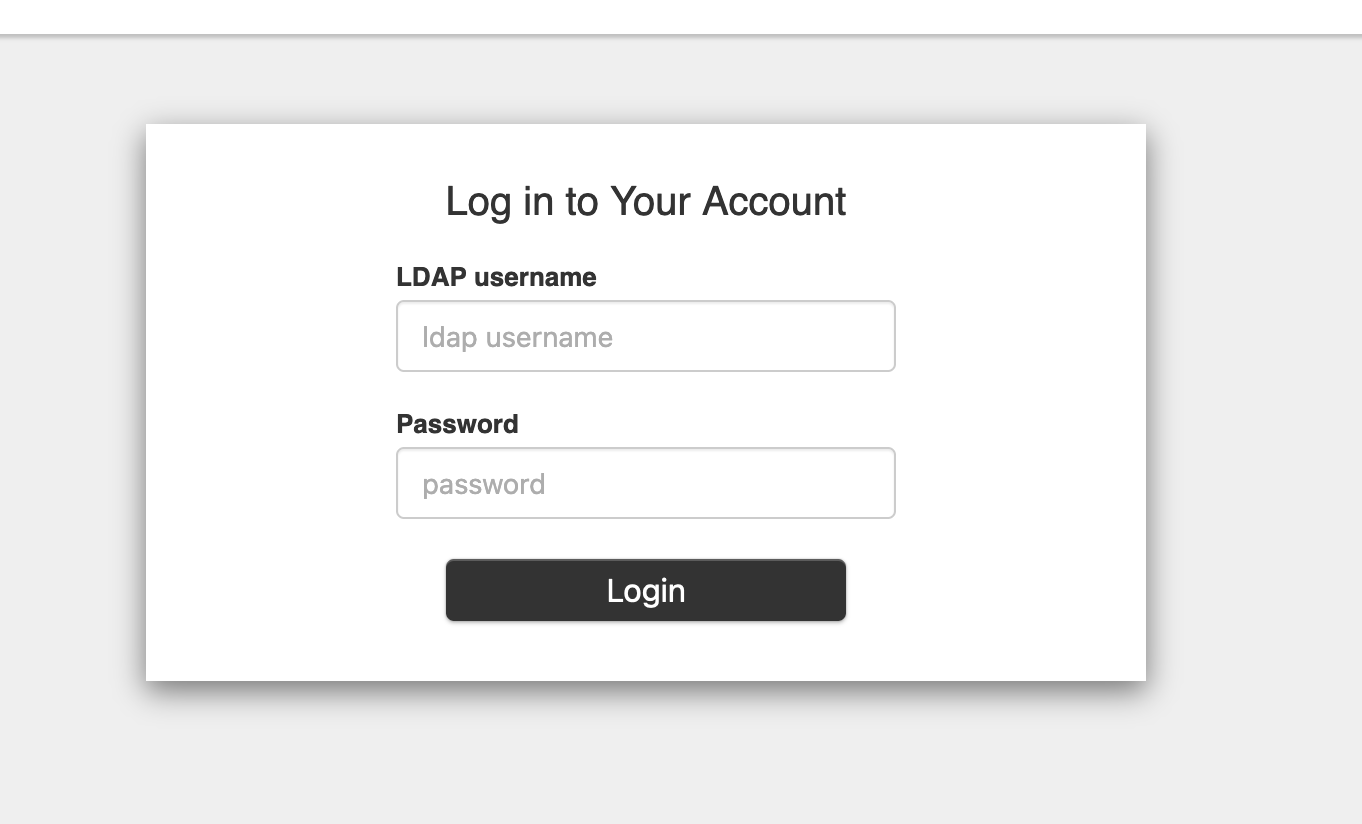

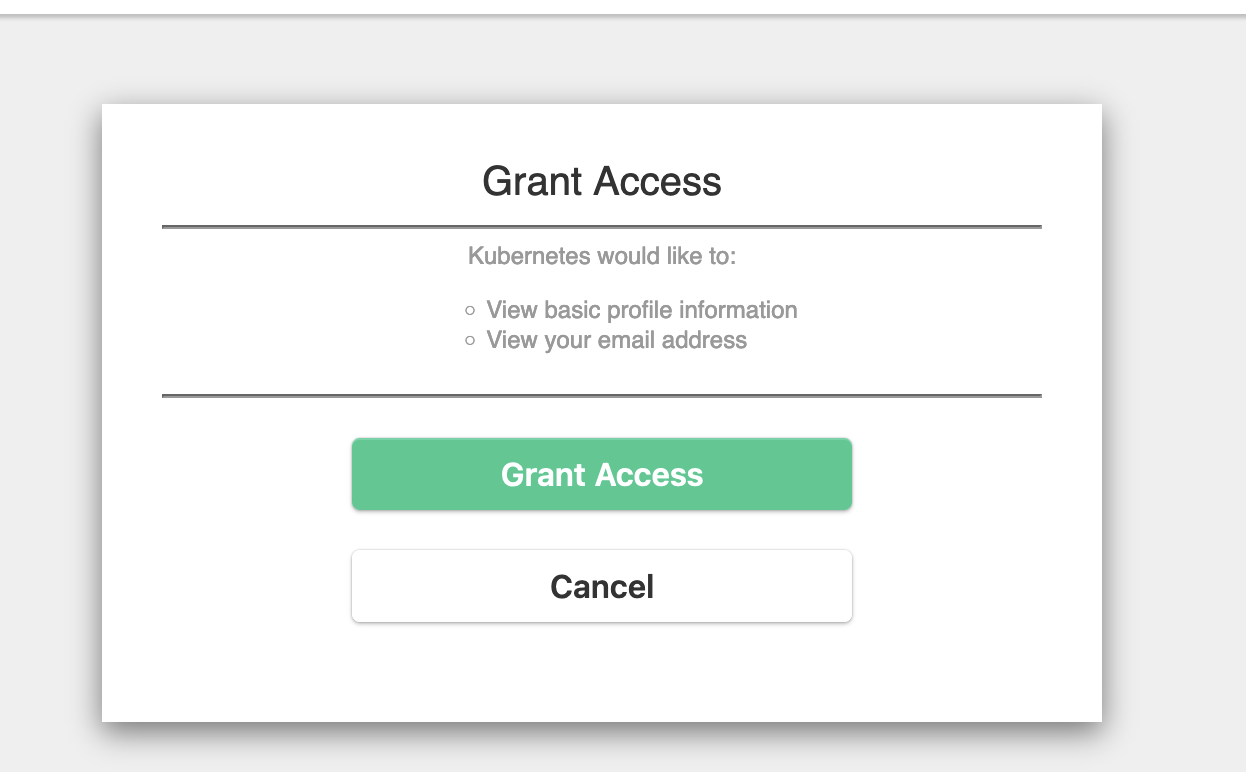

It will open the default browser and show the Dex login page. After logging in you can go back to your terminal, but unfortunately it will tell you that you’re not authorized to get pods in the default namespace. That’s because we haven’t created the proper RBAC resources yet.

Let’s craft our clusterrolebinding.yaml. We can target a user or a group:

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: oidc-admin-group
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - apiGroup: rbac.authorization.k8s.io
      kind: Group
      name: My-Ldap-group

or

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: oidc-admin-group
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - apiGroup: rbac.authorization.k8s.io
      kind: User
      name: myemail@justinpolidori.it

In order to apply the resource we first need to log in to the vcluster. We can do this by getting the admin credentials with the vcluster CLI. Note that in this case we’re not going through the flow we just built, but it is needed for the RBAC setup.

vcluster connect tenant-1 -n tenant-1 --server=https://tenant-1-vcluster.justinpolidori.it --service-account admin --cluster-role cluster-admin --insecure

It will generate an admin kubeconfig which can be used to deploy the clusterrolebinding.yaml file.

kubectl apply -f clusterrolebinding.yaml --kubeconfig ./kubeconfig.yaml # <- this is the kubeconfig generated by the vcluster command above!

Then go back to our original OIDC kubeconfig and issue the command again:

> kubectl get pods --kubeconfig ./kubeconfig-oidc.yaml

No resources found in default namespace.

It worked!

Conclusion

By leveraging kubelogin, Dex and k3s we managed to give our vcluster’s users a simple and integrated way to access the environment. With a central Dex installation we can serve multiple tenants/vclusters without having to deploy multiple instances. The code above can be improved in many ways: for example, you can change Dex’s storage backend and/or deploy cert-manager.