So far we have built CI/CD pipelines that produce Docker images, but how do we make sure of the provenance of the workloads we run on Kubernetes? How can we be sure that the containers we are running were started from images built by our pipelines?

One way to establish trust in Docker images is to sign them. We can sign images in our CI pipeline and then verify the signature at deploy time. This way, any attempt to deploy an image with a wrong or missing signature is blocked, and we (and the user performing the deployment) are notified.

Sign Images with Cosign

To sign images I use Cosign. With Cosign, signing an image takes three steps:

1. Generate a set of keys

```shell
cosign generate-key-pair
```

2. Sign the image

```shell
cosign sign --key cosign.key my-registry/my-image:1.0.0
```

You can also attach custom key/value annotations to the signature with the `-a` flag (for example, the build timestamp or the user who built the image).

3. Verify

```shell
cosign verify --key cosign.pub my-registry/my-image:1.0.0
```

```
The following checks were performed on these signatures:
  - The cosign claims were validated
  - The signatures were verified against the specified public key

{"Critical":{"Identity":{"docker-reference":""},"Image":{"Docker-manifest-digest":"sha256:32de15e003azc24addz1970z8a73434e56cc962619605za8azza43cbae59cde3"},"Type":"cosign container image signature"},"Optional":null}
```

The output above tells us that the signature has been verified. The command also returns successfully (exit code 0).

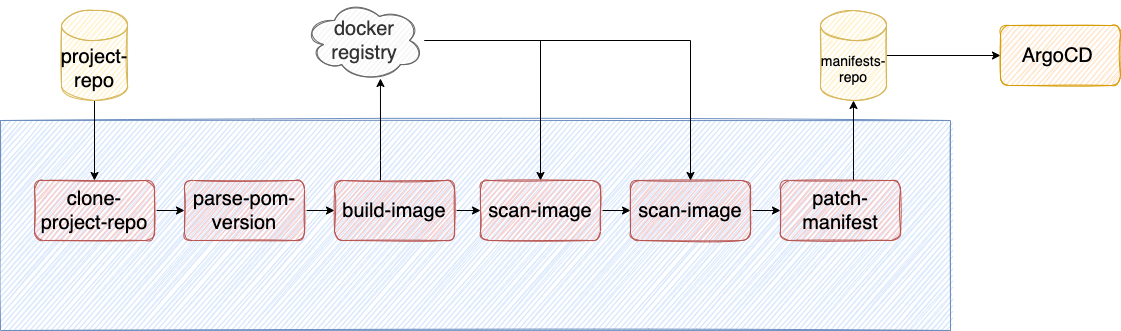

We want to automate this process (at least the sign and verify steps), so we are going to add an image-signing step to our Tekton pipeline. This step runs just before the Argo CD trigger that deploys the image into a Kubernetes cluster.

```yaml
apiVersion: tekton.dev/v1beta1
kind: ClusterTask
metadata:
  name: cosign
  labels:
    app.kubernetes.io/version: "0.2"
  annotations:
    tekton.dev/pipelines.minVersion: "0.12.1"
    tekton.dev/tags: docker.tools
spec:
  description: >-
    This Task can be used to sign a docker image.
  params:
    - name: image
      description: Image name
  steps:
    - name: sign
      image: gcr.io/projectsigstore/cosign:v1.4.1
      args: ["sign", "--key", "/etc/keys/cosign.key", "$(params.image)"]
      env:
        - name: COSIGN_PASSWORD
          valueFrom:
            secretKeyRef:
              name: cosign-key-password
              key: password
      volumeMounts:
        - name: cosign-keys
          mountPath: "/etc/keys"
          readOnly: true
  volumes:
    - name: cosign-keys
      secret:
        secretName: cosign-keys
```

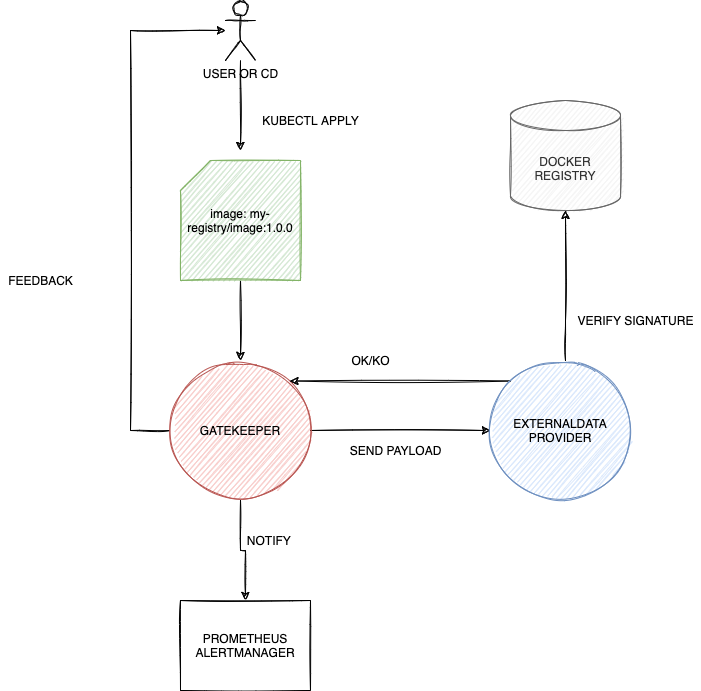

Verify signatures with OPA Gatekeeper

Now that our image is signed, how do we enforce trust in our Kubernetes cluster? We can use OPA Gatekeeper, an admission webhook for Kubernetes that enforces policies whenever a resource is created, updated or deleted.

For example, Gatekeeper can enforce that only pods with liveness/readiness probes are deployed to a cluster, or that only pods running without root privileges are allowed. There are many more use cases worth a look.

In Gatekeeper we have two main kinds of resources. A ConstraintTemplate defines the logic of a rule, and a Constraint is an instantiation of a ConstraintTemplate. Here is an example that requires resources to contain specified labels:

```yaml
apiVersion: templates.gatekeeper.sh/v1beta1
kind: ConstraintTemplate
metadata:
  name: k8srequiredlabels
  annotations:
    description: >-
      Requires resources to contain specified labels, with values matching
      provided regular expressions.
spec:
  crd:
    spec:
      names:
        kind: K8sRequiredLabels
      validation:
        openAPIV3Schema:
          type: object
          properties:
            message:
              type: string
            labels:
              type: array
              description: >-
                A list of labels and values the object must specify.
              items:
                type: object
                properties:
                  key:
                    type: string
                    description: >-
                      The required label.
                  allowedRegex:
                    type: string
                    description: >-
                      If specified, a regular expression the label's value
                      must match. The value must contain at least one match for
                      the regular expression.
  targets:
    - target: admission.k8s.gatekeeper.sh
      rego: |
        package k8srequiredlabels

        get_message(parameters, _default) = msg {
          not parameters.message
          msg := _default
        }

        get_message(parameters, _default) = msg {
          msg := parameters.message
        }

        violation[{"msg": msg, "details": {"missing_labels": missing}}] {
          provided := {label | input.review.object.metadata.labels[label]}
          required := {label | label := input.parameters.labels[_].key}
          missing := required - provided
          count(missing) > 0
          def_msg := sprintf("you must provide labels: %v", [missing])
          msg := get_message(input.parameters, def_msg)
        }

        violation[{"msg": msg}] {
          value := input.review.object.metadata.labels[key]
          expected := input.parameters.labels[_]
          expected.key == key
          # do not match if allowedRegex is not defined, or is an empty string
          expected.allowedRegex != ""
          not re_match(expected.allowedRegex, value)
          def_msg := sprintf("Label <%v: %v> does not satisfy allowed regex: %v", [key, value, expected.allowedRegex])
          msg := get_message(input.parameters, def_msg)
        }
```

Then we instantiate the ConstraintTemplate above by creating its Constraint.

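```yaml
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sRequiredLabels
metadata:
  name: all-must-have-owner
spec:
  match:
    kinds:
      - apiGroups: [""]
        kinds: ["Namespace"]
  parameters:
    message: "All namespaces must have an `owner` label in the form <username>.mycompany.it"
    labels:
      - key: owner
        allowedRegex: '^[a-zA-Z]+\.mycompany\.it$'
```

Here we're creating a rule that requires every namespace to have an `owner` label identifying its owner, for example `owner: jdoe.mycompany.it`. Kubernetes label values may contain only alphanumerics, `-`, `_` and `.` (at most 63 characters). An e-mail address containing `@` could therefore never satisfy the rule, so the company domain is joined to the username with a dot instead.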

Once Gatekeeper is installed, we can create policies for our actual use case: disallowing the deployment of untrusted Docker images.

To do so we will use a new Gatekeeper feature, External Data. It lets Gatekeeper query external providers, such as custom applications developed by third parties, while evaluating a policy. In our case we will deploy an application that validates Docker image signatures with Cosign.

This feature has to be enabled explicitly. Pass the `--enable-external-data` flag to both the Gatekeeper deployment and the Gatekeeper Audit deployment (refer to the Gatekeeper installation parameters).

Let's create the ConstraintTemplate:

```yaml
apiVersion: templates.gatekeeper.sh/v1beta1
kind: ConstraintTemplate
metadata:
  name: k8sexternaldatacosign
spec:
  crd:
    spec:
      names:
        kind: K8sExternalDataCosign
  targets:
    - target: admission.k8s.gatekeeper.sh
      rego: |
        package k8sexternaldata

        violation[{"msg": msg}] {
          # used on kind: Deployment/StatefulSet/DaemonSet
          # build a list of keys containing images
          images := [img | img = input.review.object.spec.template.spec.containers[_].image]

          # send external data request. We specify the provider as "cosign-gatekeeper-provider"
          response := external_data({"provider": "cosign-gatekeeper-provider", "keys": images})

          response_with_error(response)

          msg := sprintf("invalid response: %v", [response])
        }

        violation[{"msg": msg}] {
          # used on kind: Pod
          # build a list of keys containing images
          images := [img | img = input.review.object.spec.containers[_].image]

          # send external data request
          response := external_data({"provider": "cosign-gatekeeper-provider", "keys": images})

          response_with_error(response)

          msg := sprintf("invalid response: %v", [response])
        }

        response_with_error(response) {
          count(response.errors) > 0
          errs := response.errors[_]
          contains(errs[1], "_invalid")
        }

        response_with_error(response) {
          count(response.system_error) > 0
        }
```

Here we delegate the image signature check to an external provider with the call:

```rego
response := external_data({"provider": "cosign-gatekeeper-provider", "keys": images})
```
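The two `response_with_error` rules decide when that response counts as a violation. The following Go restatement makes them explicit. The `externalDataResult` type is a hypothetical model of what `external_data` returns. Its shape, with `errors` and `responses` as `[key, message]` pairs plus a `system_error` string, is inferred from the warning message shown at the end of this post.

```go
package main

import (
	"fmt"
	"strings"
)

// externalDataResult is a hypothetical Go model of the object returned by the
// Rego external_data builtin: per-key [key, message] pairs plus a system error.
type externalDataResult struct {
	Errors      [][2]string
	Responses   [][2]string
	SystemError string
}

// responseWithError mirrors the two response_with_error rules of the template:
// true if any per-key error contains "_invalid" OR the system error is set.
func responseWithError(r externalDataResult) bool {
	for _, e := range r.Errors {
		if strings.Contains(e[1], "_invalid") {
			return true
		}
	}
	return r.SystemError != ""
}

func main() {
	signed := externalDataResult{Responses: [][2]string{{"my-registry/my-image:1.0.0", "my-registry/my-image:1.0.0_valid"}}}
	unsigned := externalDataResult{Errors: [][2]string{{"nginx", "nginx_invalid"}}}
	unreachable := externalDataResult{SystemError: "provider timed out"}
	fmt.Println(responseWithError(signed), responseWithError(unsigned), responseWithError(unreachable)) // prints: false true true
}
```

The last case is worth keeping in mind. Any non-empty `system_error` counts as a violation, so the policy fails closed. Assuming Gatekeeper reports an unreachable or timed-out provider through `system_error`, deployments in the matched namespaces are rejected while the provider is down.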

Then we can create the Constraint

```yaml
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sExternalDataCosign
metadata:
  name: cosign-gatekeeper-provider
spec:
  enforcementAction: deny
  match:
    namespaces:
      - namespace-1
      - namespace-2
      - namespace-3
    kinds:
      - apiGroups: ["apps", ""]
        kinds: ["Deployment", "Pod", "StatefulSet", "DaemonSet"]
```

Here we apply the restriction to Deployments, Pods, StatefulSets and DaemonSets, but only in the listed namespaces.
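The following Go sketch gives a rough mental model of this `match` block. It is a simplified sketch, not Gatekeeper's actual matcher, which also supports wildcards and label selectors. An object is evaluated only if its namespace is listed and its API group and kind both appear in the same `kinds` entry. The type and function names are hypothetical.

```go
package main

import "fmt"

// kindSelector mirrors one entry of `match.kinds`: every listed group is
// combined with every listed kind.
type kindSelector struct {
	APIGroups []string
	Kinds     []string
}

// matchBlock is a simplified model of the Constraint's `match` section.
type matchBlock struct {
	Namespaces []string // empty means "all namespaces" in this sketch
	Kinds      []kindSelector
}

func contains(list []string, s string) bool {
	for _, v := range list {
		if v == s {
			return true
		}
	}
	return false
}

// applies reports whether an object with the given group, kind and namespace
// would be evaluated by the constraint.
func (m matchBlock) applies(group, kind, namespace string) bool {
	if len(m.Namespaces) > 0 && !contains(m.Namespaces, namespace) {
		return false
	}
	for _, k := range m.Kinds {
		if contains(k.APIGroups, group) && contains(k.Kinds, kind) {
			return true
		}
	}
	return false
}

func main() {
	m := matchBlock{
		Namespaces: []string{"namespace-1", "namespace-2", "namespace-3"},
		Kinds: []kindSelector{{
			APIGroups: []string{"apps", ""},
			Kinds:     []string{"Deployment", "Pod", "StatefulSet", "DaemonSet"},
		}},
	}
	fmt.Println(m.applies("apps", "Deployment", "namespace-1")) // checked
	fmt.Println(m.applies("", "Pod", "default"))               // other namespace: skipped
	fmt.Println(m.applies("apps", "ReplicaSet", "namespace-1")) // kind not listed: skipped
}
```

With the Constraint above, a Deployment in `namespace-1` is checked when it is created. The Pods its ReplicaSet creates later are checked again, while the ReplicaSet itself is not, because that kind is not listed.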

External Data with Cosign Gatekeeper Provider

At this point we need to deploy our External Data provider. We can start by cloning this repository. We then modify it to load our keys from Kubernetes and to log in to our registry:

```go
package main

import (
	"context"
	"encoding/json"
	"fmt"
	"io/ioutil"
	"log"
	"net/http"
	"os"
	"path/filepath"
	"strings"

	"github.com/google/go-containerregistry/pkg/authn"
	"github.com/google/go-containerregistry/pkg/name"
	"github.com/google/go-containerregistry/pkg/v1/remote"
	"github.com/julienschmidt/httprouter"
	"github.com/open-policy-agent/frameworks/constraint/pkg/externaldata"
	"github.com/sigstore/cosign/pkg/cosign"
)

const (
	apiVersion = "externaldata.gatekeeper.sh/v1alpha1"
)

// Verify handles Gatekeeper's external data requests: every key is an image
// reference whose cosign signature is checked against our public key.
func Verify(w http.ResponseWriter, req *http.Request, ps httprouter.Params) {
	requestBody, err := ioutil.ReadAll(req.Body)
	if err != nil {
		sendResponse(nil, fmt.Sprintf("unable to read request body: %v", err), w)
		return
	}

	var providerRequest externaldata.ProviderRequest
	if err := json.Unmarshal(requestBody, &providerRequest); err != nil {
		sendResponse(nil, fmt.Sprintf("unable to unmarshal request body: %v", err), w)
		return
	}
	log.Printf("received request for images: %v", providerRequest.Request.Keys)

	results := make([]externaldata.Item, 0)
	resultsFailedImgs := make([]string, 0)
	ctx := context.TODO()

	wDir, err := os.Getwd()
	if err != nil {
		sendResponse(nil, fmt.Sprintf("unable to get working directory: %v", err), w)
		return
	}

	// Load cosign's public key, which is used to verify the signatures
	pub, err := cosign.LoadPublicKey(ctx, filepath.Join(wDir, "cosign.pub"))
	if err != nil {
		sendResponse(nil, fmt.Sprintf("unable to load public key: %v", err), w)
		return
	}

	// Read the registry credentials from the files mounted from the
	// registry-secret Secret, dropping any trailing newline
	regUsernameByte, err := ioutil.ReadFile("/etc/registry-secret/username")
	if err != nil {
		sendResponse(nil, fmt.Sprintf("unable to read registry username: %v", err), w)
		return
	}
	regPasswordByte, err := ioutil.ReadFile("/etc/registry-secret/password")
	if err != nil {
		sendResponse(nil, fmt.Sprintf("unable to read registry password: %v", err), w)
		return
	}
	regUsername := strings.TrimRight(string(regUsernameByte), "\r\n")
	regPassword := strings.TrimRight(string(regPasswordByte), "\r\n")

	// Authenticate to our private registry when fetching the signatures
	co := &cosign.CheckOpts{
		SigVerifier: pub,
		RegistryClientOpts: []remote.Option{
			remote.WithAuth(&authn.Basic{
				Username: regUsername,
				Password: regPassword,
			}),
		},
	}

	for _, key := range providerRequest.Request.Keys {
		ref, err := name.ParseReference(key)
		if err != nil {
			// A reference that cannot be parsed cannot be verified: report it as invalid
			results = append(results, externaldata.Item{Key: key, Error: key + "_invalid"})
			resultsFailedImgs = append(resultsFailedImgs, key)
			log.Printf("unable to parse %s: %v", key, err)
			continue
		}

		// Depending on the cosign version you vendor, this function may be
		// named VerifyImageSignatures and return additional values
		if _, err = cosign.Verify(ctx, ref, co); err != nil {
			results = append(results, externaldata.Item{
				Key:   key,
				Error: key + "_invalid", // You can customize the error message here in case of failure
			})
			resultsFailedImgs = append(resultsFailedImgs, key)
			log.Printf("verification failed for %s: %v", key, err)
		} else {
			results = append(results, externaldata.Item{
				Key:   key,
				Value: key + "_valid", // You can customize the message here in case of validation
			})
		}
	}

	log.Printf("results: %v, failed images: %v", results, resultsFailedImgs)
	sendResponse(&results, "", w)
}

// sendResponse writes a ProviderResponse. Gatekeeper expects HTTP 200 and reads
// either the items or the system error from the body.
func sendResponse(results *[]externaldata.Item, systemErr string, w http.ResponseWriter) {
	response := externaldata.ProviderResponse{
		APIVersion: apiVersion,
		Kind:       "ProviderResponse",
	}
	if results != nil {
		response.Response.Items = *results
	} else {
		response.Response.SystemError = systemErr
	}

	w.Header().Set("Content-Type", "application/json")
	w.WriteHeader(http.StatusOK)
	if err := json.NewEncoder(w).Encode(response); err != nil {
		log.Printf("unable to encode response: %v", err)
	}
}

func main() {
	router := httprouter.New()
	router.POST("/validate", Verify)
	log.Fatal(http.ListenAndServe(":8090", router))
}
```

Then we can build the provider's container image and deploy it as a Deployment alongside Gatekeeper:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: cosign-gatekeeper-provider
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cosign-gatekeeper-provider
  namespace: cosign-gatekeeper-provider
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cosign-gatekeeper-provider
  template:
    metadata:
      labels:
        app: cosign-gatekeeper-provider
    spec:
      containers:
        - image: my-registry/cosign-gatekeeper-provider:1.0.0
          imagePullPolicy: Always
          name: cosign-gatekeeper-provider
          ports:
            - containerPort: 8090
              protocol: TCP
          volumeMounts:
            # Mount cosign's public key; the directory must be the container's
            # working directory, since the provider loads cosign.pub via os.Getwd()
            - name: public-key
              mountPath: /cosign-gatekeeper-provider/cosign.pub
              subPath: cosign.pub
              readOnly: true
            - name: registry-secret
              mountPath: /etc/registry-secret
              readOnly: true
      restartPolicy: Always
      volumes:
        - name: public-key
          secret:
            secretName: cosign-public-key
        - name: registry-secret
          secret:
            secretName: registry-secret
---
apiVersion: v1
kind: Service
metadata:
  name: cosign-gatekeeper-provider
  namespace: cosign-gatekeeper-provider
spec:
  ports:
    - port: 8090
      protocol: TCP
      targetPort: 8090
  selector:
    app: cosign-gatekeeper-provider
```

Then we define this application as an External Data provider for Gatekeeper:

```yaml
apiVersion: externaldata.gatekeeper.sh/v1alpha1
kind: Provider
metadata:
  name: cosign-gatekeeper-provider
  namespace: gatekeeper-system
spec:
  url: http://cosign-gatekeeper-provider.cosign-gatekeeper-provider:8090/validate
  timeout: 30
```

And we're done! Now, if we try to deploy a container from an unsigned image, we get an error:

```shell
kubectl run nginx --image=nginx -n namespace-1
```

```
Warning: [cosign-gatekeeper-provider] invalid response: {"errors": [["nginx", "nginx_invalid"]], "responses": [], "status_code": 200, "system_error": ""}
```

In conclusion

By combining Gatekeeper and Cosign for image signature validation through the new External Data feature, we were able to disallow untrusted Docker images on our Kubernetes cluster. Moreover, by integrating Cosign into our Tekton pipeline, we automated image signing in our CI process.

Keep in mind that with this setup, no Docker image runs in the selected namespaces unless we signed it. An alternative is to set the constraint's `enforcementAction` to `warn`. Violations are still evaluated and reported as failed validations, so you could be notified through Prometheus Alertmanager, for example, but the deployment is not blocked.

Finally, the Go code above can be improved further. Right now a single function, Verify, does all the work. A first step could be moving the authentication to the private registry into its own function.