In this post I want to summarize some important Kafka producer configurations that are often overlooked when working with Kafka.

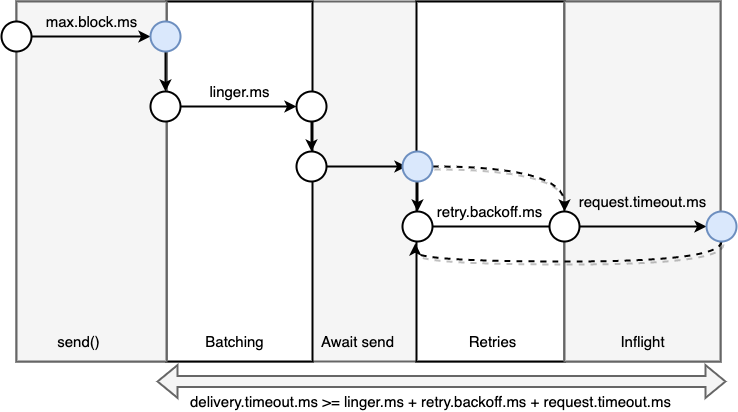

- max.block.ms: this timeout controls how long the producer may block inside the send() method. It is an upper bound on the time spent waiting for topic metadata from the brokers and for free space in the producer's send buffer, so it can be triggered when the send buffer is full or when topic metadata is not available.

- linger.ms: together with batch.size, this configuration controls batching. It basically says "wait this amount of time for additional messages before sending the current batch", so the producer can wait a few milliseconds to add more messages to a batch before sending it to Kafka. This usually increases latency a bit, but it is reasonable when using compression, since larger batches compress better.

- retry.backoff.ms: this configuration controls how long the producer waits between consecutive retries (whose number is set by the retries configuration). By default it is 100ms. Nowadays it is not recommended to tune it; instead, simulate a broker crash, measure how long the cluster takes to recover, and set delivery.timeout.ms above that time, otherwise the producer could give up too soon.

- request.timeout.ms: this configuration controls how long the producer waits for a reply from a broker when sending data. It applies to each individual request and does not include retries. If no reply arrives in time, the producer retries the request or, when no retries or delivery time are left, fails the record with a TimeoutException.

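- delivery.timeout.ms: this timeout starts once a record is ready to be sent, that is, after send() has obtained all the metadata it needs and returned. It bounds the total time spent batching, waiting for broker acknowledgements and retrying: if the record is not acknowledged in time, the send fails and the send()'s callback is invoked with a TimeoutException. As you can see from the picture, it should be configured with a value greater than the sum of linger.ms, retry.backoff.ms and request.timeout.ms; the Java producer itself only requires it to be at least linger.ms + request.timeout.ms and rejects a smaller explicit value.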
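The timeout relationships above can be checked with a small script. This is a minimal sketch in Python: `check_timeouts` and `worst_case_attempts` are hypothetical helpers, not part of any Kafka client, and the producer config is just a dict keyed by the real property names. The attempt count relies on a simplified model (an assumption): every attempt waits the full request.timeout.ms, the backoff is constant, and queueing time and any exponential backoff newer clients may apply are ignored.

```python
# Hypothetical helpers (not part of any Kafka client) that check the timeout
# relationships between producer settings given as a plain dict.


def check_timeouts(cfg: dict) -> list[str]:
    """Return human-readable problems; an empty list means the config looks consistent."""
    linger = cfg["linger.ms"]
    request = cfg["request.timeout.ms"]
    backoff = cfg["retry.backoff.ms"]
    delivery = cfg["delivery.timeout.ms"]
    problems = []
    # Rule enforced by the Java producer for an explicitly set delivery.timeout.ms.
    if delivery < linger + request:
        problems.append("delivery.timeout.ms < linger.ms + request.timeout.ms")
    # Stricter rule from the picture: also leave room for a retry backoff.
    elif delivery <= linger + backoff + request:
        problems.append("delivery.timeout.ms leaves no room for a retry backoff")
    return problems


def worst_case_attempts(cfg: dict) -> int:
    """Attempts that fit in delivery.timeout.ms if every attempt times out.

    Simplified model (assumption): linger, attempt, backoff, attempt, ...
    so n attempts take linger + n * request + (n - 1) * backoff.
    """
    linger = cfg["linger.ms"]
    request = cfg["request.timeout.ms"]
    backoff = cfg["retry.backoff.ms"]
    delivery = cfg["delivery.timeout.ms"]
    # Solve linger + n * request + (n - 1) * backoff <= delivery for integer n.
    return max(0, (delivery - linger + backoff) // (request + backoff))


if __name__ == "__main__":
    cfg = {
        "linger.ms": 5,
        "request.timeout.ms": 30_000,
        "retry.backoff.ms": 100,
        "delivery.timeout.ms": 120_000,
    }
    print(check_timeouts(cfg))       # []
    print(worst_case_attempts(cfg))  # 3
```

With these values only three attempts fit in the delivery window in the worst case, which is one more reason to size delivery.timeout.ms from a measured broker recovery time rather than from the retries count.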

Kafka’s producer performance is heavily influenced by other parameters, such as:

- compression.type: Kafka can compress messages, trading extra CPU cycles for less disk space and network bandwidth. Supported values are none (the default), gzip, snappy, lz4 and zstd.

- batch.size: this configuration applies to messages sent to the same partition (for example, messages with the same key). It sets the maximum amount of memory, in bytes, used for each batch: when a batch reaches this threshold it is sent, even if linger.ms has not expired yet.

- buffer.memory: the total amount of memory, in bytes, the producer can use to buffer messages waiting to be sent to the brokers. When this buffer is full, send() blocks for up to max.block.ms.

- max.in.flight.requests.per.connection: this configuration controls how many requests (each of which may carry several batches) the producer sends to a broker on a single connection without receiving responses (unacknowledged requests). Higher values can improve throughput at the cost of more memory. Keep in mind that, when idempotence is disabled, setting this value > 1 can change the order in which messages are written to Kafka after a failed send is retried (if retries are enabled).

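- max.request.size: the maximum size, in bytes, of a single produce request, so it caps both the largest message and the number of messages per request. For example, if set to 1MB, the largest message a producer can send is 1MB, or a single request can carry up to 1024 messages of 1KB each.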

- receive.buffer.bytes and send.buffer.bytes: sizes of the TCP receive and send buffers used by the sockets (-1 means the OS defaults are used). These settings are usually tweaked in multi-DC scenarios, where producers and brokers communicate over higher-latency links.

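- enable.idempotence: enables the idempotent producer, so retries cannot write duplicate messages to a partition; it is the building block of Kafka's exactly-once semantics. It requires max.in.flight.requests.per.connection <= 5, retries > 0 and acks=all, otherwise a ConfigException is thrown.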
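The constraints on enable.idempotence can be expressed as a small check run before creating a producer. This is a minimal Python sketch: `idempotence_violations` and `ASSUMED_DEFAULTS` are hypothetical names, not part of any Kafka client (the real producer does its own validation and throws the ConfigException), and the fallback values are what we assume recent Java clients use when a key is missing.

```python
# Hypothetical helper, not a Kafka client API: lists the settings that conflict
# with enable.idempotence=true, mirroring the rules described above.

# Assumed defaults of recent Java clients, used when a key is absent.
ASSUMED_DEFAULTS = {
    "acks": "all",
    "retries": 2147483647,
    "max.in.flight.requests.per.connection": 5,
}


def idempotence_violations(cfg: dict) -> list[str]:
    """Return conflicting settings; empty if there are none or idempotence is not enabled."""
    # Only configs that explicitly set enable.idempotence to the boolean True are checked.
    if cfg.get("enable.idempotence") is not True:
        return []
    merged = {**ASSUMED_DEFAULTS, **cfg}
    problems = []
    # acks=-1 is the numeric spelling of acks=all.
    if str(merged["acks"]) not in ("all", "-1"):
        problems.append("acks must be 'all'")
    if merged["retries"] <= 0:
        problems.append("retries must be > 0")
    if merged["max.in.flight.requests.per.connection"] > 5:
        problems.append("max.in.flight.requests.per.connection must be <= 5")
    return problems


if __name__ == "__main__":
    ok = {"enable.idempotence": True, "acks": "all", "retries": 3,
          "max.in.flight.requests.per.connection": 5}
    bad = {"enable.idempotence": True, "acks": "1",
           "max.in.flight.requests.per.connection": 10}
    print(idempotence_violations(ok))   # []
    # Reports both the acks and the max.in.flight.requests.per.connection conflicts.
    print(idempotence_violations(bad))
```

A check like this is mostly useful when the producer config is assembled from several sources, where an overridden acks or max.in.flight.requests.per.connection is easy to miss.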