I have been using Tekton for the past year, and I am still amazed at how easy it is to bootstrap CI pipelines with it.

I will not go deep into how Tekton works (take a look at the official documentation for that), but it is important to mention that it executes your CI code in isolated containers. For example, if your pipeline is composed of the steps "git-clone" and "docker-build", each step runs its code inside its own container: for the first step we can use the alpine/git image and for docker-build we can use gcr.io/kaniko-project/executor, with no plugins involved.

Tekton Pipelines is built around two main concepts:

- Pipelines: a CRD that declares the tasks composing the pipeline and the parameters that are passed down to them.

- Tasks: a single, independent, reusable unit that executes an action, taking its input from the pipeline parameters. A task can have multiple steps, each executed after the previous one, and each step uses a container image to perform its job.
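To make the two concepts concrete, here is a minimal sketch. The names hello-task, hello-pipeline and greeting are hypothetical, and alpine:3.18 is just an example image: the Pipeline declares a parameter and passes it down to a Task whose two steps run one after the other, each in its own container.

```yaml
# Hypothetical minimal Task: two steps, each in its own container, executed in order.
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: hello-task
spec:
  params:
    - name: greeting
      type: string
  steps:
    - name: print-greeting
      image: alpine:3.18
      script: |
        #!/bin/sh
        echo "$(params.greeting)"
    - name: print-done        # starts only after print-greeting has completed
      image: alpine:3.18
      script: |
        #!/bin/sh
        echo "done"
---
# Hypothetical Pipeline that passes its own parameter down to the Task.
apiVersion: tekton.dev/v1beta1
kind: Pipeline
metadata:
  name: hello-pipeline
spec:
  params:
    - name: greeting
      type: string
      default: "hello from Tekton"
  tasks:
    - name: hello
      taskRef:
        name: hello-task
      params:
        - name: greeting
          value: $(params.greeting)
```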

My Pipeline

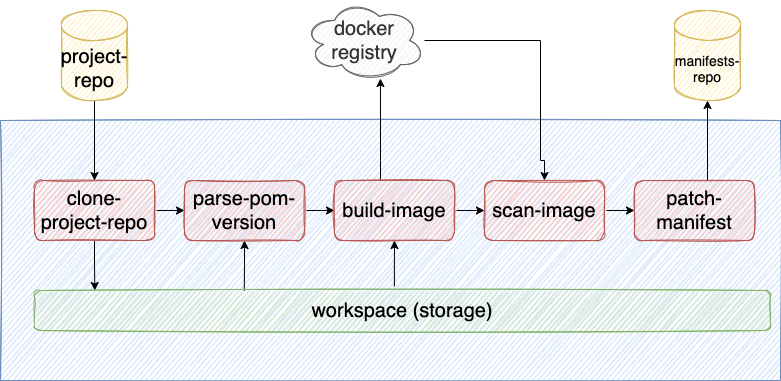

For Spring Boot projects I usually have this flow:

- A user commits and pushes code to a project.

- A webhook (configured on the project) triggers Tekton, which clones the project and extracts some metadata such as the artifact name and artifact version.

- Based on these values, it builds a Docker image whose name and tag match 1:1 the artifactId and version in the pom.xml.

- A Trivy scan is performed.

- Tekton clones the repo that holds all the manifests (Deployments, Services, etc.) and patches the deployment.yaml of the project being built; the CI is complete.

After the last step, ArgoCD takes over and deploys the updated application to a target cluster. This lets us follow a GitOps approach: the desired state of the manifests is always up to date in the repository, and Argo continuously deploys the changes.

Setup

Tekton can be deployed on a Kubernetes cluster by applying the following manifests:

- Tekton Pipelines (the Tekton core, which provides the Pipeline and Task CRDs):

kubectl apply --filename https://storage.googleapis.com/tekton-releases/pipeline/latest/release.yaml

- Tekton Triggers (enables event-based pipelines; the second manifest installs the core interceptors):

kubectl apply --filename https://storage.googleapis.com/tekton-releases/triggers/latest/release.yaml

kubectl apply --filename https://storage.googleapis.com/tekton-releases/triggers/latest/interceptors.yaml

- Tekton Dashboard:

kubectl apply --filename https://storage.googleapis.com/tekton-releases/dashboard/latest/tekton-dashboard-release.yaml

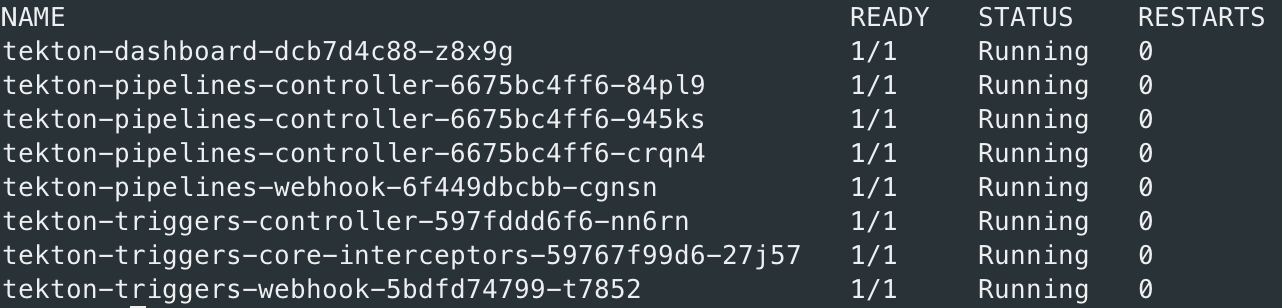

At the end we gonna end up having the following pods running inside the tekton-pipelines namespace:
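The Triggers components installed above are what connect the project webhook to a pipeline run. The following is a minimal sketch, not the exact configuration I use, assuming a GitLab push webhook, a Secret named gitlab-webhook-secret holding the webhook token, a ServiceAccount named tekton-triggers-sa with the RBAC permissions Triggers needs, and a TriggerTemplate named my-backend-pipeline-template that creates the PipelineRun (all of these names are hypothetical). The TriggerBinding maps fields of the GitLab push payload to parameters, while the EventListener validates each request with the gitlab ClusterInterceptor installed by interceptors.yaml.

```yaml
# Hypothetical TriggerBinding: maps GitLab push-event fields to pipeline parameters.
apiVersion: triggers.tekton.dev/v1beta1
kind: TriggerBinding
metadata:
  name: gitlab-push-binding
  namespace: my-project-pipelines
spec:
  params:
    - name: project-repo-url
      value: $(body.project.git_http_url)
    - name: project-name
      value: $(body.project.name)
    - name: commit-sha
      value: $(body.checkout_sha)
    - name: user-name
      value: $(body.user_username)
---
# Hypothetical EventListener: accepts only GitLab "Push Hook" events carrying the expected token.
apiVersion: triggers.tekton.dev/v1beta1
kind: EventListener
metadata:
  name: gitlab-listener
  namespace: my-project-pipelines
spec:
  serviceAccountName: tekton-triggers-sa
  triggers:
    - name: gitlab-push
      interceptors:
        - ref:
            name: gitlab
          params:
            - name: secretRef
              value:
                secretName: gitlab-webhook-secret
                secretKey: token
            - name: eventTypes
              value: ["Push Hook"]
      bindings:
        - ref: gitlab-push-binding
      template:
        ref: my-backend-pipeline-template
```

Tekton exposes the EventListener through a Service named el-gitlab-listener, and that is the endpoint to configure as the webhook on the GitLab project. Note that the push payload only carries the full ref (refs/heads/<branch>), so deriving a plain branch name needs an extra step, for example a CEL interceptor.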

Pipeline

The pipeline I will show is used to build Spring Boot applications. I reused some existing tasks that you can find in the Tekton community catalog, which offers reusable tasks you can start from (and adapt to your needs).

The idea is to clone the project into a workspace, the storage that lets Tekton share files between tasks (in this case, our project code). The cloning task is the git-clone task from the Tekton catalog. Then, since we need to build a Docker image with the same name and version as the project we just cloned, we need to extract the artifactId and the version from the pom.xml (the image maps 1:1 to the cloned project: myregistry/myteam/artifactId:artifactVersion). To achieve that, we create this task:

apiVersion: tekton.dev/v1beta1
kind: ClusterTask
metadata:
  name: parse-pom-version
  labels:
    app.kubernetes.io/version: "0.2"
  annotations:
    tekton.dev/pipelines.minVersion: "0.12.1"
    tekton.dev/tags: build-tool
spec:
  description: >-
    This Task can be used to parse the pom.xml in order to get artifact-name and artifact-version
  workspaces:
    - name: git-repo
      description: The workspace consisting of maven project.
  params:
    - name: maven-image
      type: string
      description: Maven base image
      default: maven-adoptopenjdk-11:3.6
    - name: context-dir
      type: string
      description: >-
        The context directory within the repository for git-repos on
        which we want to execute maven goals.
      default: "."
    - name: directory
      type: string
  results:
    - name: artifact-name
      description: Artifact name
    - name: artifact-version
      description: Artifact version
  steps:
    - name: mvn-image-to-build
      image: $(params.maven-image)
      workingDir: $(workspaces.git-repo.path)/$(params.directory)/$(params.context-dir)
      script: |
        # Ask Maven for the artifactId and version, then write them to the task results
        readonly ARTIFACTID="$(/usr/bin/mvn org.apache.maven.plugins:maven-help-plugin:3.1.1:evaluate -Dexpression='project.artifactId' -q -DforceStdout)"
        readonly VERSION="$(/usr/bin/mvn org.apache.maven.plugins:maven-help-plugin:3.1.1:evaluate -Dexpression='project.version' -q -DforceStdout)"
        echo -n "$ARTIFACTID" | tee $(results.artifact-name.path)
        echo -n "$VERSION" | tee $(results.artifact-version.path)

Starting from the tasks rather than from the pipeline lets us focus on building reusable tasks and then compose them into the desired pipeline. The task above is a ClusterTask (so it is usable in every namespace of the Kubernetes cluster) that accepts 3 parameters and one workspace:

- maven-image: the Docker image (in this case Maven) used by this single-step task.

- context-dir: the context directory within the repository on which we want to execute Maven goals.

- directory: a subpath where we expect the project folder.

- git-repo (workspace): where the task expects to read the cloned project.

Then we declare that this task produces 2 results: the artifact-name and artifact-version mentioned before. Results are like variables that expose a task's output to other tasks.

Then we come to the core of the task, the steps section. Here there is a single step: it uses the image from the maven-image param and runs in the folder where we expect the cloned project (workingDir). Its script asks Maven to evaluate the artifactId and version from the pom.xml and writes these values into the result files.

Next comes the task that builds the project and produces the Docker image, based on Kaniko:
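Because it is a standalone ClusterTask, parse-pom-version can be tried on its own before wiring it into a pipeline. A sketch of such a TaskRun, assuming the project has already been cloned into a PersistentVolumeClaim named workspace-claim under my-group/my-service/master (the claim name, the path and the TaskRun name are all hypothetical):

```yaml
apiVersion: tekton.dev/v1beta1
kind: TaskRun
metadata:
  name: parse-pom-version-test      # hypothetical name
  namespace: my-project-pipelines
spec:
  taskRef:
    kind: ClusterTask
    name: parse-pom-version
  params:
    # Hypothetical path of the cloned project inside the workspace.
    - name: directory
      value: my-group/my-service/master
  workspaces:
    - name: git-repo
      persistentVolumeClaim:
        claimName: workspace-claim  # hypothetical PVC already holding the clone
```

If the run succeeds, artifact-name and artifact-version should appear among the TaskRun results, for example in the Tekton Dashboard.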

apiVersion: tekton.dev/v1beta1
kind: ClusterTask
metadata:
  name: kaniko
  labels:
    app.kubernetes.io/version: "0.1"
  annotations:
    tekton.dev/pipelines.minVersion: "0.12.1"
    tekton.dev/tags: image-build
spec:
  description: >-
    This Task builds git-repo into a container image using Google's kaniko tool.
    Kaniko doesn't depend on a Docker daemon and executes each
    command within a Dockerfile completely in userspace. This enables
    building container images in environments that can't easily or
    securely run a Docker daemon, such as a standard Kubernetes cluster.
  params:
    - name: IMAGE
      description: Name (reference) of the image to build.
    - name: DOCKERFILE
      description: Path to the Dockerfile to build.
      default: ./Dockerfile
    - name: CONTEXT
      description: The build context used by Kaniko.
      default: ./
    - name: CONTEXT-SUB-PATH
      description: Sub-path (relative to CONTEXT) used as the actual build context.
      default: target/
    - name: ARTIFACT-NAME
      description: Artifact name
    - name: ARTIFACT-VERSION
      description: Artifact version
    - name: BUILDER_IMAGE
      description: The image on which builds will run
      default: gcr.io/kaniko-project/executor:v1.5.2-debug
    - name: TARGET
      description: specifies what docker stage to execute
      default: production
    - name: revision
      type: string
    - name: project-name
      type: string
    - name: project-namespace
      type: string
    - name: cache-repo
      type: string
      default: ""
      description: "nexus-hosted pam npm repository url"
    - name: LIBRARY_NAMES
      type: array
      default: ["excluded projects"]
      description: Array of library names to be ignored during kaniko push
    - name: commit-sha
      type: string
      default: "00000000000000000000"
      description: "commit sha of the image"
  workspaces:
    - name: git-repo
  results:
    - name: IMAGE-DIGEST
      description: Digest of the image just built.
  steps:
    - name: build-and-push
      workingDir: $(workspaces.git-repo.path)/$(params.project-namespace)/$(params.project-name)/$(params.revision)
      image: $(params.BUILDER_IMAGE)
      # specifying DOCKER_CONFIG is required to allow kaniko to detect docker credential
      # https://github.com/tektoncd/pipeline/pull/706
      env:
        - name: DOCKER_CONFIG
          value: /tekton/home/.docker
      # execute docker build: skip test stage when pushing on feature/release (--skip-unused-stages).
      # TARGET (as for now, defined inside trigger binding) is always 'production'.
      # we always push the docker image but that image comes from the production stage.
      args: ["$(params.LIBRARY_NAMES[*])"]
      script: |
        #!/busybox/sh
        branch=$(params.revision)
        build_ts=$(date)
        # feature and release branches skip the stages not needed to build TARGET
        if [ -z "${branch##*feature*}" ] || [ -z "${branch##*release*}" ]; then
          EXTRA_ARGS="--skip-unused-stages"
        fi
        # libraries (LIBRARY_NAMES, received as script arguments) are built but not pushed
        for NAME in "$@"; do
          if [ "$(params.ARTIFACT-NAME)" = "$NAME" ]; then
            EXTRA_ARGS="$EXTRA_ARGS --no-push"
            break
          fi
        done
        /kaniko/executor \
          $EXTRA_ARGS \
          --dockerfile=$(params.DOCKERFILE) \
          --context=$(workspaces.git-repo.path)/$(params.project-namespace)/$(params.project-name)/$(params.revision)/$(params.CONTEXT)/$(params.CONTEXT-SUB-PATH) \
          --build-arg=JAR_FILE=$(params.ARTIFACT-NAME)-$(params.ARTIFACT-VERSION).jar \
          --build-arg=ARTIFACT_VERSION=$(params.ARTIFACT-VERSION) \
          --build-arg=COMMIT_SHA=$(params.commit-sha) \
          --build-arg=BUILD_TS="$build_ts" \
          --destination=$(params.IMAGE) \
          --oci-layout-path=$(workspaces.git-repo.path)/$(params.project-namespace)/$(params.project-name)/$(params.revision)/$(params.CONTEXT)/image-digest \
          --cache=true \
          --cache-dir=/image-cache \
          --cache-repo=myregistry/myteam/kaniko-cache \
          --target=$(params.TARGET)
      # kaniko assumes it is running as root, which means this example fails on platforms
      # that default to run containers as random uid (like OpenShift). Adding this securityContext
      # makes it explicit that it needs to run as root.
      securityContext:
        runAsUser: 0
      volumeMounts:
        - name: image-cache
          mountPath: /image-cache
        - name: maven-settings
          mountPath: /maven-settings
    - name: write-digest
      workingDir: $(workspaces.git-repo.path)/$(params.project-namespace)/$(params.project-name)/$(params.revision)
      image: gcr.io/tekton-releases/github.com/tektoncd/pipeline/cmd/imagedigestexporter:v0.16.2
      # output of imagedigestexport [{"key":"digest","value":"sha256:eed29..660","resourceRef":{"name":"myrepo/myimage"}}]
      command: ["/ko-app/imagedigestexporter"]
      args:
        - -images=[{"name":"$(params.IMAGE)","type":"image","url":"$(params.IMAGE)","digest":"","OutputImageDir":"$(workspaces.git-repo.path)/$(params.project-namespace)/$(params.project-name)/$(params.revision)/image-digest"}]
        - -terminationMessagePath=$(params.CONTEXT)/image-digested
      securityContext:
        runAsUser: 0
      volumeMounts:
        - name: image-cache
          mountPath: /image-cache
    - name: digest-to-results
      workingDir: $(workspaces.git-repo.path)/$(params.project-namespace)/$(params.project-name)/$(params.revision)
      image: jq
      script: |
        # extract the sha256 digest and expose it as the IMAGE-DIGEST result
        cat $(params.CONTEXT)/image-digested | jq '.[0].value' -rj | tee $(results.IMAGE-DIGEST.path)
      volumeMounts:
        - name: image-cache
          mountPath: /image-cache
  volumes:
    - name: image-cache
      persistentVolumeClaim:
        claimName: cache-claim
    - name: maven-settings
      configMap:
        name: maven-settings

Given the workspace where the Spring Boot project has been cloned, this task builds the Docker image from the Dockerfile and exposes its sha256 digest as a result, so that other tasks can (eventually) use it. Note that this task is customized to my needs compared to the one in the Tekton catalog: I need to skip pushing the image when building libraries, and to skip some stages when pushing to the feature/release branches. I also use a cache to speed up builds: I mount a volume which Kaniko populates with the Maven dependencies (.m2 folder). For reference, the Dockerfile that Kaniko uses is the one described in the previous post.

Then we come to the image scan task: here we use Trivy to scan the Docker image (and the JAR produced by the previous build) for vulnerabilities.
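The kaniko task expects a PersistentVolumeClaim named cache-claim and a ConfigMap named maven-settings to exist in the namespace where it runs. A minimal sketch of both, where the 5Gi size, the namespace and the settings.xml content (a single mirror pointing at a hypothetical Nexus URL) are assumptions:

```yaml
# Volume mounted at /image-cache by the kaniko task (size and namespace are assumptions).
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cache-claim
  namespace: my-project-pipelines
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
---
# settings.xml mounted at /maven-settings; the mirror URL is hypothetical.
apiVersion: v1
kind: ConfigMap
metadata:
  name: maven-settings
  namespace: my-project-pipelines
data:
  settings.xml: |
    <settings>
      <mirrors>
        <mirror>
          <id>internal</id>
          <mirrorOf>*</mirrorOf>
          <url>https://nexus.example.com/repository/maven-public/</url>
        </mirror>
      </mirrors>
    </settings>
```

With ReadWriteOnce the volume can only be mounted from a single node at a time, so if concurrent builds may land on different nodes, a ReadWriteMany storage class is worth considering.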

apiVersion: tekton.dev/v1beta1
kind: ClusterTask
metadata:
  name: trivy
  labels:
    app.kubernetes.io/version: "0.2"
  annotations:
    tekton.dev/pipelines.minVersion: "0.12.1"
    tekton.dev/tags: k8s
    tekton.dev/displayName: "trivy"
spec:
  description: >-
    This task will scan an image for vulnerabilities
  params:
    - name: image
      description: image to scan
      type: string
  steps:
    - name: scan
      image: trivy
      # --exit-code 0: report vulnerabilities without failing the pipeline
      args: ["--exit-code", "0", "--severity", "MEDIUM,HIGH,CRITICAL", "$(params.image)"]

For now I don't want the pipeline to fail when a vulnerability is found, so I explicitly set the exit code to 0.

Finally, we use the patch-manifest task:
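Once the findings are under control, the same step can become a quality gate. A sketch, assuming the upstream aquasec/trivy image and a recent Trivy release where image scanning goes through the image subcommand (older releases took the image name directly, as in the args above): a non-zero exit code fails the TaskRun only when CRITICAL vulnerabilities with an available fix are found.

```yaml
steps:
  - name: scan
    image: aquasec/trivy   # assumption: upstream image, whose entrypoint is trivy
    args:
      - image
      - --exit-code
      - "1"                # fail the step when matching vulnerabilities are found
      - --severity
      - CRITICAL
      - --ignore-unfixed   # skip vulnerabilities that have no fix yet
      - $(params.image)
```

Keep in mind that in the pipeline shown later, patch-manifest runs after build-image, in parallel with the scan: for the gate to actually block the manifest update, its runAfter would need to point to scan-image.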

apiVersion: tekton.dev/v1beta1
kind: ClusterTask
metadata:
  name: patch-manifest
  labels:
    app.kubernetes.io/version: "0.2"
  annotations:
    tekton.dev/pipelines.minVersion: "0.12.1"
    tekton.dev/tags: k8s
    tekton.dev/displayName: "patch manifest"
spec:
  description: >-
    This task will patch a repository where all manifests are kept in order to trigger a CD event.
  params:
    - name: manifests-repo-name
      description: manifests repo name where all manifests are kept
      type: string
    - name: manifests-branch-name
      description: manifests repo branch where all manifests are kept
      type: string
    - name: project-name
      description: project name
      type: string
    - name: project-namespace
      type: string
      description: The group on gitlab
    - name: user-name
      description: the user that triggered the pipeline
      type: string
    - name: container-image
      description: container image
      type: string
      default: ""
    - name: deployment-path
      description: deployment path
      type: string
      default: ""
    - name: project-branch-name
      description: project branch name
      type: string
      default: ""
    [OMITTED]
  steps:
    - name: patch
      image: kubectl:release-1.20
      script: |
        #!/bin/bash
        if [[ "$(params.verbose)" == "true" ]] ; then
          set -x
        fi
        DEPLOYMENT_YAML="/var/$(params.subdirectory)/$(params.deployment-path)"
        readonly NEW_CONTAINER_NAME=$(params.project-name)
        readonly NEW_CONTAINER_IMAGE=$(params.container-image)
        readonly PATCH_FILE=patch-file.yaml
        # CronJobs nest the pod template under jobTemplate, Deployments do not
        if grep -q "kind: CronJob" "$DEPLOYMENT_YAML"; then
          cat<<EOF > "/var/$PATCH_FILE"
        spec:
          jobTemplate:
            spec:
              template:
                metadata:
                  annotations:
                    ci-source-branch: $(params.project-branch-name)
                    ci-last-commit: $(params.commit-sha)
                    ci-last-build: $(date '+%Y-%m-%d_%H:%M:%S')
                    ci-user-name: $(params.user-name)
                spec:
                  containers:
                  - name: $NEW_CONTAINER_NAME
                    image: $NEW_CONTAINER_IMAGE
        EOF
          echo "Created Job patch file -> $PATCH_FILE"
        else
          cat<<EOF > "/var/$PATCH_FILE"
        spec:
          template:
            metadata:
              annotations:
                ci-source-branch: $(params.project-branch-name)
                ci-last-commit: $(params.commit-sha)
                ci-last-build: $(date '+%Y-%m-%d_%H:%M:%S')
                ci-user-name: $(params.user-name)
            spec:
              containers:
              - name: $NEW_CONTAINER_NAME
                image: $NEW_CONTAINER_IMAGE
        EOF
          echo "Created Deployment patch file -> $PATCH_FILE"
        fi
        mkdir -p /var/$(params.manifests-repo-name)
        cp -r /var/$(params.subdirectory) /var/$(params.manifests-repo-name)/$(params.manifests-branch-name)
        # apply the patch locally (no cluster access needed) and write the result next to the original
        kubectl patch -f "$DEPLOYMENT_YAML" -p "$(cat /var/$PATCH_FILE)" --local=true -oyaml > /var/$(params.manifests-repo-name)/$(params.manifests-branch-name)/$(params.deployment-path)_patched.yaml
      volumeMounts:
        - name: tmp
          mountPath: /var
    - name: push
      image: git:release-2.26.2
      script: |
        #!/bin/sh
        set -x
        DEPLOYMENT_PATCHED_YAML="$(params.deployment-path)_patched.yaml"
        DEPLOYMENT_YAML="$(params.deployment-path)"
        cd /var/$(params.manifests-repo-name)/$(params.manifests-branch-name)
        mv $DEPLOYMENT_PATCHED_YAML $DEPLOYMENT_YAML
        git pull --quiet origin HEAD:$(params.manifests-branch-name)
        git add $DEPLOYMENT_YAML
        git commit --quiet -m "[CI-TEKTON] Updated manifests for $(params.project-name)/$(params.project-branch-name)"
        git push --quiet origin HEAD:$(params.manifests-branch-name)
      volumeMounts:
        - name: tmp
          mountPath: /var
  volumes:
    - name: tmp
      emptyDir: {}

This task updates the target YAML in the manifests repository in order to trigger the rollout. This keeps responsibilities separate: Tekton for the CI and ArgoCD for the CD.
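The patch is a strategic merge patch, in which containers are matched by name: NEW_CONTAINER_NAME comes from the project-name parameter, so the container in the target manifest must be named after the project, otherwise kubectl adds a second container instead of updating the image. A minimal sketch of a compatible manifest in the manifests repo, where my-service, the namespace, the image tag and the port are hypothetical:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-service
  namespace: target-namespace
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-service
  template:
    metadata:
      labels:
        app: my-service
    spec:
      containers:
        # Must match the project-name passed to patch-manifest.
        - name: my-service
          # Overwritten by the pipeline with the freshly built image.
          image: myregistry/myteam/my-service:0.0.1-SNAPSHOT
          ports:
            - containerPort: 8080
```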

Pipeline CRD

Finally, given the tasks we created above we can compose our pipeline.

apiVersion: tekton.dev/v1beta1
kind: Pipeline
metadata:
  name: my-backend-pipeline
  namespace: my-project-pipelines
spec:
  params:
    - name: manifests-branch-name
      type: string
      description: The manifests branch to clone.
    - name: project-namespace
      type: string
      description: the group on gitlab
    - name: project-branch-name
      type: string
      description: The git branch to clone.
    - name: project-repo-url
      type: string
      description: The git repository URL to clone from.
    - name: manifests-repo-url
      type: string
      description: The manifests repository URL to clone from.
    - name: user-name
      type: string
      description: The user that triggered the pipeline
    - name: project-name
      type: string
      description: The project name
    - name: manifests-name
      type: string
      description: The manifests project name
    - name: oci-image-path
      type: string
      description: The oci image path
    - name: commit-sha
      type: string
      description: The commit sha that triggered the pipeline
    - name: deployment-path
      type: string
      description: the deployment path
    - name: release-tag-prefix
      type: string
      description: the release tag (if any) to be used
    - name: docker-stage-target
      type: string
      description: specifies what docker stage to execute
  workspaces:
    - name: workspace-data
      description: |
        This workspace receives the cloned project repository and is
        shared with the following tasks.
    - name: maven-settings
      description: |
        Where maven settings.xml resides
  tasks:
    - name: clone-project-repo
      taskRef:
        kind: ClusterTask
        name: git-clone
      params:
        - name: url
          value: $(params.project-repo-url)
        - name: revision
          value: $(params.project-branch-name)
        - name: subdirectory
          value: $(params.project-namespace)/$(params.project-name)/$(params.project-branch-name)
        - name: sslVerify
          value: "false"
      workspaces:
        - name: git-repo
          workspace: workspace-data
    - name: parse-pom-version
      taskRef:
        kind: ClusterTask
        name: parse-pom-version
      runAfter: ["clone-project-repo"]
      params:
        - name: directory
          value: $(params.project-namespace)/$(params.project-name)/$(params.project-branch-name)
      workspaces:
        - name: git-repo
          workspace: workspace-data
        - name: maven-settings
          workspace: maven-settings
    - name: build-image
      taskRef:
        kind: ClusterTask
        name: kaniko
      runAfter: ["parse-pom-version"]
      params:
        - name: revision
          value: $(params.project-branch-name)
        - name: project-name
          value: $(params.project-name)
        - name: project-namespace
          value: $(params.project-namespace)
        - name: IMAGE
          value: $(params.oci-image-path)/$(tasks.parse-pom-version.results.artifact-name):$(params.release-tag-prefix)$(tasks.parse-pom-version.results.artifact-version)
        - name: ARTIFACT-NAME
          value: $(tasks.parse-pom-version.results.artifact-name)
        - name: ARTIFACT-VERSION
          value: $(tasks.parse-pom-version.results.artifact-version)
        - name: CONTEXT-SUB-PATH
          value: ./
        - name: TARGET
          value: "$(params.docker-stage-target)"
        - name: cache-repo
          value: $(params.oci-image-path)/$(tasks.parse-pom-version.results.artifact-name)
      workspaces:
        - name: git-repo
          workspace: workspace-data
    - name: scan-image
      taskRef:
        kind: ClusterTask
        name: trivy
      runAfter: ["build-image"]
      when:
        - input: "$(params.project-name)"
          operator: notin
          values: ["skip-a-project"]
      params:
        - name: image
          value: $(params.oci-image-path)/$(tasks.parse-pom-version.results.artifact-name):$(params.release-tag-prefix)$(tasks.parse-pom-version.results.artifact-version)
    - name: patch-manifest
      retries: 5
      when:
        - input: "$(tasks.parse-pom-version.results.artifact-name)"
          operator: notin
          values: ["skip-a-project"]
      taskRef:
        kind: ClusterTask
        name: patch-manifest
      runAfter: ["build-image"]
      params:
        - name: container-image
          value: $(params.oci-image-path)/$(tasks.parse-pom-version.results.artifact-name):$(params.release-tag-prefix)$(tasks.parse-pom-version.results.artifact-version)
        - name: manifests-repo-name
          value: $(params.manifests-name)
        - name: manifests-branch-name
          value: $(params.manifests-branch-name)
        - name: deployment-path
          value: $(params.deployment-path)
        - name: user-name
          value: $(params.user-name)
        # project-name and project-namespace have no default in patch-manifest, so they must be passed
        - name: project-name
          value: $(params.project-name)
        - name: project-namespace
          value: $(params.project-namespace)
        - name: project-branch-name
          value: $(params.project-branch-name)
        - name: commit-sha
          value: $(params.commit-sha)
        - name: verbose
          value: "true"

As you can see, I am just composing the tasks created before and passing the pipeline parameters down to them.
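When the pipeline is not started by a webhook, for example while testing it, it can be started with a PipelineRun. A sketch in which every parameter value is hypothetical, workspace-data is backed by an existing PVC named workspace-claim (also hypothetical), and maven-settings by the maven-settings ConfigMap:

```yaml
apiVersion: tekton.dev/v1beta1
kind: PipelineRun
metadata:
  generateName: my-backend-pipeline-run-
  namespace: my-project-pipelines
spec:
  pipelineRef:
    name: my-backend-pipeline
  params:
    # All values below are hypothetical examples.
    - name: project-repo-url
      value: https://my-gitlab-instance.com/mygroup/my-service.git
    - name: project-namespace
      value: mygroup
    - name: project-name
      value: my-service
    - name: project-branch-name
      value: master
    - name: commit-sha
      value: 0123456789abcdef0123456789abcdef01234567
    - name: user-name
      value: jdoe
    - name: manifests-repo-url
      value: https://my-gitlab-instance.com/mygroup/myproject.git
    - name: manifests-name
      value: myproject
    - name: manifests-branch-name
      value: master
    - name: deployment-path
      value: manifests-folder/my-service/deployment.yaml
    - name: oci-image-path
      value: myregistry/myteam
    - name: release-tag-prefix
      value: ""
    - name: docker-stage-target
      value: production
  workspaces:
    - name: workspace-data
      persistentVolumeClaim:
        claimName: workspace-claim
    - name: maven-settings
      configMap:
        name: maven-settings
```

Since it relies on generateName, it has to be submitted with kubectl create -f rather than kubectl apply -f.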

ArgoCD

To deploy the changes that Tekton pushes to the manifests repo, we configure an ArgoCD Application CRD that triggers a rollout each time a manifest changes:

apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: my-project
  namespace: argocd
spec:
  destination:
    name: target_cluster
    namespace: target-namespace
  project: my-project
  source:
    directory:
      jsonnet: {}
      recurse: true
    path: manifests-folder
    repoURL: https://my-gitlab-instance.com/mygroup/myproject.git
    targetRevision: master
  syncPolicy:
    automated: {}

Having a central Git repository with all the manifests is a huge plus when dealing with microservices on Kubernetes: by leveraging the ArgoCD app of apps pattern, we can deploy every change made to the repo, automatically (or just by clicking the Sync button).

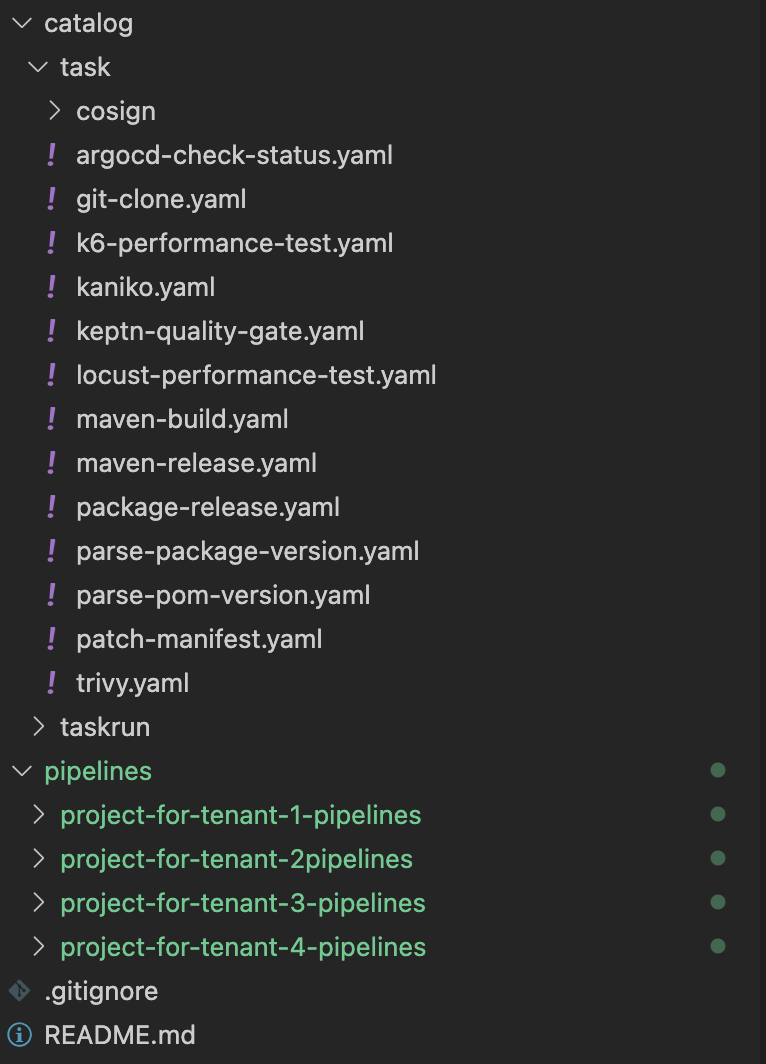
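The app of apps pattern boils down to a root Application that points at a folder containing other Application manifests (such as the my-project one above), so adding a file there is enough to onboard a new service. A sketch, where the root-apps name and the apps folder are hypothetical:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: root-apps                             # hypothetical name
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://my-gitlab-instance.com/mygroup/myproject.git
    targetRevision: master
    path: apps                                # hypothetical folder holding Application manifests
  destination:
    server: https://kubernetes.default.svc    # child Applications are created in the Argo CD cluster
    namespace: argocd
  syncPolicy:
    automated:
      prune: true                             # delete Applications whose manifest was removed
      selfHeal: true                          # revert manual drift
```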

Conclusion

Here I wanted to share the CI/CD pipeline I use to build projects. As shown, I use Tekton for Continuous Integration and ArgoCD to trigger deployments on a target cluster. Tekton has become my go-to choice for cloud-native pipelines running on top of Kubernetes: it gives me flexibility and lets me reuse Kubernetes clusters for CI. Eventually I ended up with a repository that contains all the Tekton pipelines and the task catalog.

Managing the pipelines of different projects (which we can call tenants) this way gives me a central catalog of Tekton tasks that I reuse in pipelines that vary from project to project.

As you can see, I don't use an imperative approach (kubectl apply -f deployment.yaml); instead, I follow a GitOps approach with a centralized manifests repo that ArgoCD watches for changes.